Mapping link value through graphs and weights

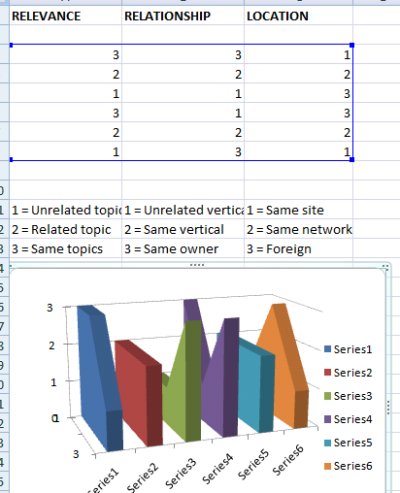

I was looking at a paper that discusses three-dimensional graphing for the World Wide Web, and it occurred to me that people might find value in graphing their backlinks. Creating such graphs requires doing research and recording data, but there would be value in comparing snapshots of your links according to a weighting scheme of your own creation. For a quick example, I created a 3-dimensional graph in Excel, but to be honest I would not recommend using Excel for anything other than 2-dimensional graphs. Also, if you evaluate a lot of links together your charts will become unwieldy, so software that lets you plot individual points rather than simple bar and line charts might work better for you.

What you want to do is define your own link weighting algorithm. You don’t have to use charts to evaluate links this way. Once you set a baseline for your linking strategies you’ll be able to dispense with the charts, but a chart can show you how well you’ve succeeded in building a powerful, diverse linking profile.

To create a 3-dimensional chart you need to work with ordered triplets. An ordered triplet is simply three values (I will call them terms) placed in a vector (x, y, z). The vector’s terms don’t have to be plot-points on an X,Y,Z graph, but that’s obviously what you would want in order to create a 3-dimensional representation.

A link weight score can simply be the sum of the vector’s terms: x + y + z. You could also average the terms, or assign a weighting coefficient to each term to adjust how much it contributes to the score.
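Here is a minimal Python sketch of those three scoring options. The function names, the sample triplet, and the coefficients are all hypothetical, chosen only to illustrate the arithmetic; nothing here is a standard formula.

```python
def link_weight_sum(triplet):
    """Score a link as the plain sum of its terms: x + y + z."""
    x, y, z = triplet
    return x + y + z


def link_weight_average(triplet):
    """Score a link as the mean of its three terms."""
    return link_weight_sum(triplet) / 3


def link_weight_weighted(triplet, coefficients):
    """Score a link as a weighted sum: a*x + b*y + c*z."""
    return sum(c * t for c, t in zip(coefficients, triplet))


# Hypothetical link scored on three axes (x, y, z).
link = (3, 1, 3)

# Hypothetical coefficients: discount y, emphasize z.
coefficients = (1.0, 0.5, 2.0)

print(link_weight_sum(link))                      # 7
print(link_weight_average(link))                  # about 2.33
print(link_weight_weighted(link, coefficients))   # 9.5
```

Whichever option you pick, apply the same one to every link so the scores stay comparable from one snapshot to the next.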

In the chart above I selected three valuations: Relevance, Relationship, and Location. To keep the computations (and chart) simple I went with three simplistic distributions:

Relevance

- Unrelated topic - Obviously, if a link to your document appears in a document that covers a completely dissimilar topic from your own, the link is less likely to bring interested visitors to your document.

- Related topic - This means the linking document’s topic is similar to your own but not quite the same. The other document could be an article on pet care and your article could be about dog grooming.

- Same topic - This should be self-explanatory. In this model I assign the highest value to strong relevance, but you can use a different valuation scale.

Relationship

- Unrelated vertical - This is a site-specific factor, not a document-specific factor. So you can have a page about dog grooming on your SEO blog, but if the pet care page that links to your dog grooming page is on a pet care site, that’s an unrelated vertical.

- Same vertical - This would be like SEO site linking to SEO site, even if the linking article and destination article are about different topics.

- Same owner - In this model, ownership is more important than vertical. You can reverse the valuation for other models, obviously. There is no right or wrong about this.

Location

- Same site - In this model, links from sibling pages are not assigned much weight.

- Same network - In this model, links from other domains/hosts in the network are given more weight than sibling links.

- Foreign site - You don’t own this site, plain and simple.
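One way to record these valuations is sketched below in Python. It assumes each axis is scored from 1 to 3 in the order the options are listed above; the class and function names are hypothetical. The sample link is the pet care page described under Relationship: a related topic, on a site in an unrelated vertical, that you don’t own.

```python
from enum import IntEnum


class Relevance(IntEnum):
    UNRELATED_TOPIC = 1
    RELATED_TOPIC = 2
    SAME_TOPIC = 3


class Relationship(IntEnum):
    UNRELATED_VERTICAL = 1
    SAME_VERTICAL = 2
    SAME_OWNER = 3


class Location(IntEnum):
    SAME_SITE = 1
    SAME_NETWORK = 2
    FOREIGN_SITE = 3


def to_triplet(relevance, relationship, location):
    """Turn the three valuations into an ordered triplet (x, y, z)."""
    return (int(relevance), int(relationship), int(location))


# A pet care page on a pet care site linking to a dog grooming
# page on an SEO blog.
pet_care_link = to_triplet(
    Relevance.RELATED_TOPIC,
    Relationship.UNRELATED_VERTICAL,
    Location.FOREIGN_SITE,
)

print(pet_care_link)       # (2, 1, 3)
print(sum(pet_care_link))  # 6
```

If your model ranks vertical above ownership, or uses a wider scale, only the numbers in the enums need to change.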

The chart I created from the illustrative data I provided indicates the relative value of each link. The highest values are assigned to Series 1 and Series 4 (links 1 and 4), although their graph areas look very different. Link 1 is a sibling link, which (in this model) weakens or diminishes its value. Link 4 is from an unrelated vertical but it’s on a page with the same topic on a foreign site.

If we simply compare the Link Weight values (7, 6, 5, 7, 6, 5) we would see that all the links were stronger than the median value of 3.5. They would all be good links to have. But the graph gives us an indication of how diverse our strengths and weaknesses are. I would be concerned if a random sampling of real links very closely resembled any one of those chart objects. A diverse link profile should reveal itself in the derivative values you can create with charting tools.

An Average Link Weight comparison (2.3, 2, 1.7, 2.3, 2, 1.7) would be even less revealing about the diversity of the link profile, although it would agree with the overall relative strength of the links. 3 would be the highest value on the scale, and none of the links has a value below 1.7.

You could sum the averages (12) or average the averages (2) or average the sums (6). Each computation would provide you with a number that could be recorded and compared to other link sets or to the same link sets over some period of time (this model, of course, implies a limited scope for change over any time frame).
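The three roll-up numbers can be checked with a few lines of Python. This sketch uses only the six Link Weight sums quoted above and assumes three terms per link; the variable names are hypothetical.

```python
# Link Weight sums for the six sample links, as listed above.
link_sums = [7, 6, 5, 7, 6, 5]
TERMS_PER_LINK = 3  # each link is an ordered triplet

# Per-link averages: roughly 2.3, 2, 1.7, 2.3, 2, 1.7.
link_averages = [s / TERMS_PER_LINK for s in link_sums]

sum_of_averages = sum(link_averages)
average_of_averages = sum_of_averages / len(link_averages)
average_of_sums = sum(link_sums) / len(link_sums)

# Rounded to hide floating-point noise from the divisions by 3.
print(round(sum_of_averages, 2))      # 12.0
print(round(average_of_averages, 2))  # 2.0
print(round(average_of_sums, 2))      # 6.0
```

Recording the same three numbers for each snapshot gives you a simple series to compare over time.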

Your value scale can be anything from Boolean (0,1) to Infinite, but you need to be consistent across all your term slots (your chart axes). Some people might argue you could set different limits for each axis but I think that would cause a flattening of 3-dimensional charts and it would certainly skew the averages.

Now, I know what many of you are thinking. In your private shame you still look at Google’s Toolbar PR value. You could certainly plug that data into a matrix and use it to value links, but it would be virtually worthless in this kind of computation. Google tells us that the Toolbar PR values are only updated occasionally, whereas internal PageRank fluctuates — perhaps on a daily or hourly basis. You’d be using an inaccurate static value to represent dynamic valuations.

In other words, if a document is assigned a TBPR value of 4 today, your Google Toolbar will report that same value for the next 3-4 months regardless of what changes occur in the document’s Google-acknowledged link profile. Today my PR 4 page may be more valuable than your PR 4 page. Tomorrow, your PR 4 page may be more valuable than mine.

Using Toolbar PR in a link weighting model will render your computations worthless. You need to use discrete, verifiable data for your calculations. Google can manually reduce Fred’s Toolbar PR for buying links, but that won’t change the fact that you don’t own his site, his documents are similar to your own, and he sends you a lot of traffic through his links.

You can (and should) determine the value of links on the basis of what you know about them: Are they placed in prominent positions on a page? Are they embedded amidst many other links? Are they embedded in JavaScript or nofollowed? Are they image links or text links? Is the anchor text in the same language as yours? Were they given by Fred naturally or did you ask for them? Did Fred put them there, or did you, or did someone else?

Every quality of a link can be quantified and measured. Your goal in capturing and organizing this data should not be to validate the Toolbar PageRank values but rather to help you identify the best possible linking resources at your disposal AND to ensure that you’re creating diverse, natural-seeming link profiles (technically, there is no such thing as a “natural” linking profile but that is a discussion for another day).

If there were a universal link weight standard people could have some pretty meaningful discussions without disclosing site URLs. Why? Because if you inflate your link weight values people would give you advice and feedback on the basis of link weight values that you don’t possess. You’d find their advice to be pretty much useless, so falsifying link weight values when seeking feedback would be a self-defeating proposition. There would always be some idiot who thinks he needs to hide his true link weight values, but most people would quickly see that a standard link weight value system protects privacy and gives everyone a more-or-less level playing field.

You don’t have to talk in terms of “example.com” versus “my widget site”. You can just say, “My link profile looks like” and people could respond by saying, “Well, that looks pretty good — maybe your problem lies elsewhere” or “that’s not a very good link profile — maybe you should work on your link strength and diversity”.

There is, of course, much more that could be said on this topic. I’ll come back to it again.

www.seo-theory.com

published @ September 15, 2008