A new landmark in computer vision

How did we do it? It wasn't easy. For starters, where do you find a good list of thousands of landmarks? Even if you have that list, where do you get the pictures to develop visual representations of the locations? And how do you pull that source material together in a coherent model that actually works, is fast, and can process an enormous corpus of data? Think about all the different photographs of the Golden Gate Bridge you've seen — the different perspectives, lighting conditions and image qualities. Recognizing a landmark can be difficult for a human, let alone a computer.

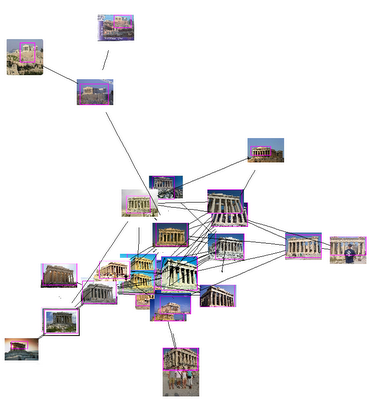

Our research builds on the vast number of images on the web, the ability to search those images, and advances in object recognition and clustering techniques. First, we generated a list of landmarks from two sources: 40 million GPS-tagged photos (from Picasa and Panoramio) and online tour guide webpages. Next, we found candidate images for each landmark using these sources and Google Image Search, and then "pruned" those candidates using efficient image matching and unsupervised clustering techniques. Finally, we developed a highly efficient indexing system for fast image recognition. The following image provides a visual representation of the resulting clustered recognition model:

In the above image, related views of the Acropolis are "clustered" together, allowing for a more efficient image matching system.
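To make the clustering idea concrete, here is a minimal Python sketch. It is not the method from the paper. It assumes that pairwise image matches have already been computed by some matcher, groups images into connected components with a union-find structure, and discards groups that are too small as likely noise. The names `cluster_by_matches`, `matched_pairs`, and `min_cluster_size` are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def cluster_by_matches(image_ids, matched_pairs, min_cluster_size=2):
    """Group images into clusters of transitively matching views.

    image_ids: iterable of image identifiers (illustrative strings here).
    matched_pairs: iterable of (id_a, id_b) pairs that an image matcher
        judged to show the same scene. The matcher itself is assumed.
    min_cluster_size: clusters smaller than this are pruned as noise.
    """
    image_ids = list(image_ids)
    parent = {img: img for img in image_ids}

    def find(x):
        # Follow parent links to the root, shortening the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    # Merge the components of every matched pair.
    for a, b in matched_pairs:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    # Collect the members of each component.
    clusters = defaultdict(list)
    for img in image_ids:
        clusters[find(img)].append(img)

    # Prune small clusters and return a deterministic ordering.
    return sorted(
        sorted(members)
        for members in clusters.values()
        if len(members) >= min_cluster_size
    )


# Made-up candidate images: three views of one landmark, two views of
# another, and one unrelated image that matched nothing.
candidates = ["acropolis_1", "acropolis_2", "acropolis_3",
              "bridge_1", "bridge_2", "unrelated"]
matches = [("acropolis_1", "acropolis_2"),
           ("acropolis_2", "acropolis_3"),
           ("bridge_1", "bridge_2")]
print(cluster_by_matches(candidates, matches))
```

In this toy run the unrelated image ends up alone in its own component and is dropped, which mirrors the "pruning" step in spirit. A real system would also need a robust matcher and a way to handle false matches that link different landmarks.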

While we've come a long way towards unlocking the information stored in text on the web, there's still much work to be done unlocking the information stored in pixels. This research demonstrates the feasibility of efficient computer vision techniques based on large, noisy datasets. We expect the insights we've gained will lay a useful foundation for future research in computer vision.

If you're interested in learning more about this research, check out the paper.

Jay Yagnik, Head of Computer Vision Research

googleblog.blogspot.com

Published June 22, 2009